How to test AIRC processing containers locally

Introduction

The ability to run the container locally makes debugging issues with algorithm execution faster and more convenient. In this tutorial, we will demonstrate how to download and test the AIRC processing container image created by the Developer portal on a local developer PC.

Requirements

A container runtime is required to run the Docker containers locally. Note that currently only Windows containers are supported.

- Windows 10 or 11: Docker Desktop

- Windows Server 2019 or newer: the container runtime is part of the system, but needs to be enabled

Local execution of processing unit

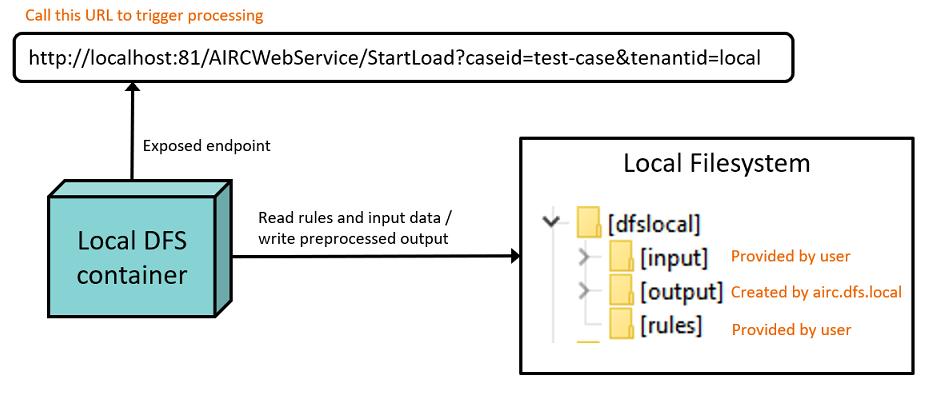

The following steps will guide you through the execution of the processing unit in the AIRC Docker container. This execution simulates the process in the AIRC cloud environment to some extent. The extension wrapper application, used as the main executable within the container, provides the option to run in 'local' mode, where the AIRC engine services are mocked. The database and blob storage, typically used in the cloud environment, are replaced by a simple file structure on the local computer, mapped as a volume mount to the container. This local file structure is created in advance using the local version of the Data Filtering Service, available as a container image named 'airc.dfs.local'. The testing workflow therefore consists of two steps:

- Data preprocessing:

- Processing unit execution:

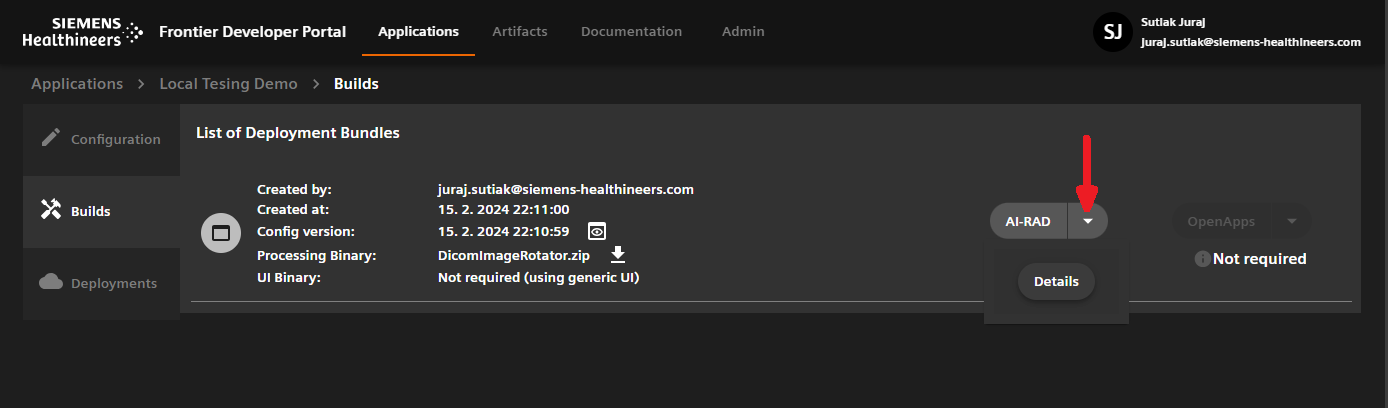

Prerequisite: Get access to the container images

Details about the generated container image are available in the Developer portal, in the "Builds" section:

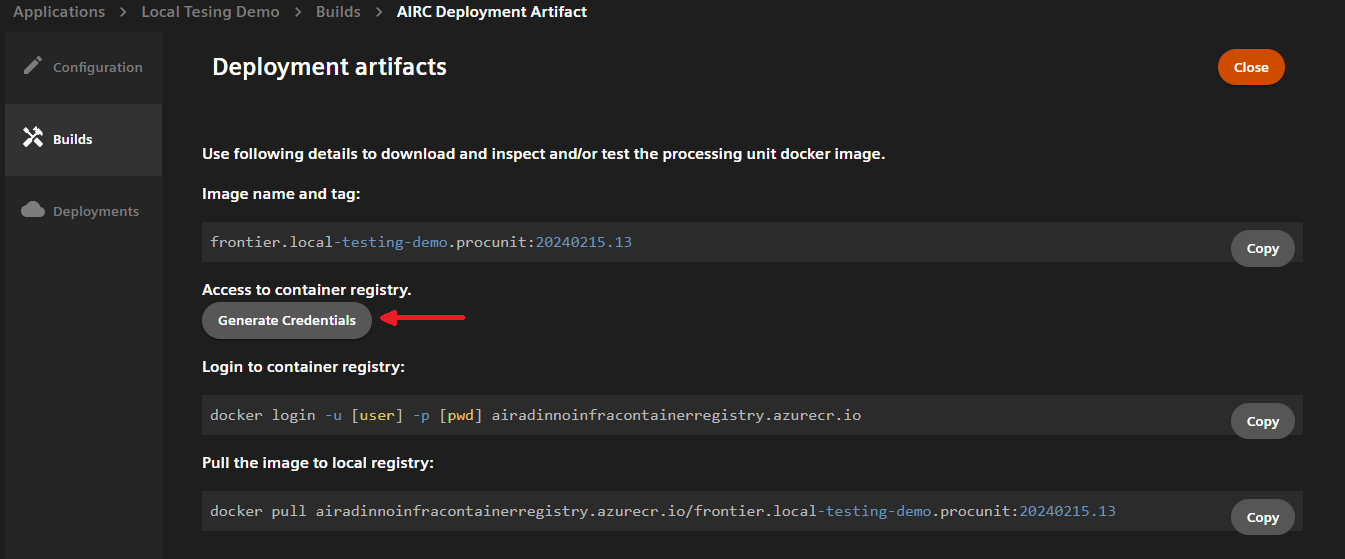

On the deployment artifacts page you can see the name of the container image and a button for generating access credentials to the container registry. Click the button to generate the credentials, then copy and store them locally. They are not stored by the Developer portal.

When the credentials are created, log in to the Docker registry and pull the container image to the local cache using the commands shown on the deployment artifacts page.

The credentials will also grant you access to the airc.dfs.local container image, which is used for data preprocessing.
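The exact commands should be copied from the deployment artifacts page. As a rough orientation only, they usually follow the standard `docker login` and `docker pull` pattern. The sketch below assembles such commands in Python; the function names and the user name argument are illustrative placeholders, not values taken from the Developer portal.

```python
import subprocess

# Registry host as it appears in the docker commands of this tutorial.
REGISTRY = "airadinnoinfracontainerregistry.azurecr.io"


def login_command(username: str) -> list[str]:
    """Build a `docker login` call that reads the password from stdin."""
    return ["docker", "login", REGISTRY, "--username", username, "--password-stdin"]


def pull_command(image: str) -> list[str]:
    """Build a `docker pull` call for an image (name:tag) in the registry."""
    return ["docker", "pull", f"{REGISTRY}/{image}"]


def login_and_pull(username: str, password: str, image: str) -> None:
    """Log in and pull the image. Requires a running Docker engine."""
    subprocess.run(login_command(username), input=password.encode(), check=True)
    subprocess.run(pull_command(image), check=True)
```

Passing the password through stdin keeps it out of the process list and the shell history.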

Step 1: Data preparation

- Create a folder on a local filesystem. In this example we will use folder c:\dfslocal.

- Create a subfolder c:\dfslocal\input and copy the input DICOM images there. You can use any folder structure, the files will be read through all sub-folders recursively.

- Create a subfolder c:\dfslocal\rules. Navigate to AIRC configuration section of your application in the developer portal and save the configuration of the routing rules to a file rules.json inside c:\dfslocal\rules directory. The root element of the JSON should be ClinicalExtension. Alternatively, you can download a simplified routing rules version which will load all the series from the input without filtering: rules.json.

- Start the local DFS container:

docker run -e AIRC_DFS_LOCAL_PORT=80 -p 0.0.0.0:81:80/tcp -v "c:\dfslocal:c:\dfslocal" --memory 4096m --name local_dfs --rm -it airadinnoinfracontainerregistry.azurecr.io/airc.dfs.local:20231208.4

This command will pull the airc.dfs.local image first, if it is not in the local cache yet.

- Trigger preprocessing by invoking the following request (you can paste the URL to a web browser, too). You can use any string for the caseid parameter and the string "local" for the tenantid parameter.

curl "http://localhost:81/AIRCWebService/StartLoad?caseid=test-case&tenantid=local"

After processing is finished, the folder c:\dfslocal\output will be created with the preprocessed input data.

- Stop the local DFS container (we named the container 'local_dfs'):

docker stop local_dfs
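The manual preparation in this step can also be scripted. The following is a minimal Python sketch, not part of the AIRC tooling: the function names are hypothetical, and the check assumes that "root element" means the top-level JSON object has a ClinicalExtension key.

```python
import json
from pathlib import Path
from urllib.parse import urlencode


def prepare_dfs_root(root: Path) -> None:
    """Create the input and rules subfolders used by the local DFS."""
    (root / "input").mkdir(parents=True, exist_ok=True)
    (root / "rules").mkdir(parents=True, exist_ok=True)


def check_rules(root: Path) -> bool:
    """Return True if rules/rules.json exists and has a ClinicalExtension root.

    Assumption: the root element is a key of the top-level JSON object.
    """
    rules_file = root / "rules" / "rules.json"
    if not rules_file.is_file():
        return False
    # utf-8-sig tolerates a byte order mark that some Windows editors add.
    with rules_file.open(encoding="utf-8-sig") as handle:
        rules = json.load(handle)
    return isinstance(rules, dict) and "ClinicalExtension" in rules


def start_load_url(case_id: str, tenant_id: str = "local", port: int = 81) -> str:
    """Build the StartLoad request URL for the local DFS container."""
    query = urlencode({"caseid": case_id, "tenantid": tenant_id})
    return f"http://localhost:{port}/AIRCWebService/StartLoad?{query}"
```

Calling `prepare_dfs_root(Path(r"c:\dfslocal"))` before copying the DICOM files, and `check_rules` before starting the container, catches a missing or malformed rules.json early. `start_load_url("test-case")` yields the same URL as the curl request above.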

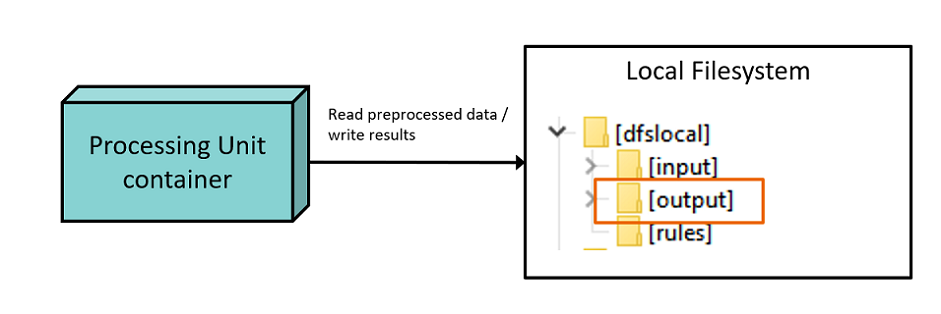

Step 2: Processing unit execution

- Run the processing unit container. Replace the container image name and tag with your own. Note that we pass the caseid from the previous step as the first command-line parameter. We also map the c:\dfslocal\output folder created in the previous step to a folder inside the container, c:\data, and pass this path as the second command-line parameter. The entrypoint used here is AIRC.WrapperExtension.exe, which is the name of the application we use for wrapping the uploaded algorithm.

docker run -v "c:\dfslocal\output:c:\data" --memory 8000m --name processing_unit -it --rm --entrypoint AIRC.WrapperExtension.exe airadinnoinfracontainerregistry.azurecr.io/[container-image-name] test-case c:\data
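To make the role of each argument explicit, the command above can be assembled programmatically. This is an illustrative Python sketch; the function name and its default values are assumptions chosen to mirror the example, and the image argument stands for your own full image name and tag.

```python
def processing_unit_command(
    image: str,
    case_id: str,
    host_output: str = r"c:\dfslocal\output",
    container_data: str = r"c:\data",
    memory: str = "8000m",
) -> list[str]:
    """Build the docker run argument list for the processing unit."""
    return [
        "docker", "run",
        # Map the preprocessed data from Step 1 into the container.
        "-v", f"{host_output}:{container_data}",
        "--memory", memory,
        "--name", "processing_unit",
        "-it", "--rm",
        # The wrapper application replaces the image's default entrypoint.
        "--entrypoint", "AIRC.WrapperExtension.exe",
        image,
        # Wrapper arguments: the case ID first, then the data path inside the container.
        case_id,
        container_data,
    ]
```

Everything after the image name is passed to the entrypoint, which is why the case ID and the data path come last.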

After successful execution, the result DICOM series will appear in c:\dfslocal\output\blobs\case-local-test-case\localExtensionInvocationId\Results. If the intermediate directory is used, the intermediate data is stored in c:\dfslocal\output\blobs\case-local-test-case\localExtensionInvocationId\CaseStorage.
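The output locations can be derived from the case ID and tenant ID used in Step 1. The Python sketch below assumes the folder name follows the pattern case-[tenantid]-[caseid], which is inferred from the single example path above and is not confirmed elsewhere; the function names are hypothetical.

```python
from pathlib import PureWindowsPath


def invocation_dir(output_root: str, case_id: str, tenant_id: str = "local") -> PureWindowsPath:
    """Folder of the local extension invocation (naming pattern is an assumption)."""
    return (
        PureWindowsPath(output_root)
        / "blobs"
        / f"case-{tenant_id}-{case_id}"
        / "localExtensionInvocationId"
    )


def results_dir(output_root: str, case_id: str, tenant_id: str = "local") -> PureWindowsPath:
    """Folder where the result DICOM series are written."""
    return invocation_dir(output_root, case_id, tenant_id) / "Results"


def case_storage_dir(output_root: str, case_id: str, tenant_id: str = "local") -> PureWindowsPath:
    """Folder holding intermediate data, if the intermediate directory is used."""
    return invocation_dir(output_root, case_id, tenant_id) / "CaseStorage"
```

`PureWindowsPath` only manipulates path strings, so these helpers also work on a non-Windows machine, for example when preparing a test report.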